Atomic velero snapshots with longhorn

🗓️ Date: 2025-01-13 · 🗺️ Word count: 1249 · ⏱️ Reading time: 6 minutes

Longhorn’s powerful volume snapshotting feature can be integrated with the kubernetes VolumeSnapshot CRDs and used by velero to create consistent volume backups.

In this tutorial, velero will be installed and configured in a cluster running longhorn. Kubernetes manifest backups and longhorn volume backups will be stored in an external object storage bucket on Oracle Cloud. Finally, a backup will be created and restored to demonstrate the integration workflow.

Prerequisites

- longhorn installed in the k8s cluster

- velero not yet installed in the k8s cluster

- velero cli installed on the local machine

- Oracle Cloud tenancy

- Oracle Cloud bucket

- Oracle Cloud user key for object storage

The Oracle Cloud user key creation procedure is shown in this previous blog post.

Steps

- Configure longhorn backups with the external bucket target.

This involves creating a secret in the longhorn-system namespace that holds the object storage endpoint and the access credentials.

Then, the backup target URL and the secret name must be configured in longhorn’s web UI.

These two settings can also be configured via the helm chart values.

As an example:

```yaml
defaultSettings:
  backupTarget: s3://bucket-lab-k3s-velero-demo@eu-zurich-1/longhorn
  backupTargetCredentialSecret: oracle-cloud-object-storage-bucket-backup
```

For the full procedure, see this previous blog post.

Create a manual backup of a volume to test that everything is working. Also check the bucket content, for instance via S3Drive or WinSCP.
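The secret from this step can also be created from the command line. The sketch below assumes the S3 secret keys documented by longhorn (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_ENDPOINTS); the function names and the OCI_STORAGE_NAMESPACE, OCI_KEY_ID and OCI_KEY_SECRET variables are hypothetical placeholders to adapt.

```shell
#!/usr/bin/env bash
# Sketch: create the secret referenced by backupTargetCredentialSecret.
# Hypothetical helpers; the OCI_* variables are placeholders you export yourself.
set -euo pipefail

# S3-compatible endpoint of Oracle Cloud object storage.
# Arguments: object storage namespace, region (e.g. eu-zurich-1).
oci_s3_endpoint() {
  printf 'https://%s.compat.objectstorage.%s.oraclecloud.com\n' "$1" "$2"
}

# Renders the secret client-side and applies it to longhorn-system, so the
# command can be re-run when the secret already exists.
# Arguments: secret name, endpoint, access key id, secret access key.
apply_longhorn_backup_secret() {
  kubectl create secret generic "$1" \
    --namespace longhorn-system \
    --from-literal=AWS_ACCESS_KEY_ID="$3" \
    --from-literal=AWS_SECRET_ACCESS_KEY="$4" \
    --from-literal=AWS_ENDPOINTS="$2" \
    --dry-run=client -o yaml \
    | kubectl apply -f -
}

# Example:
#   apply_longhorn_backup_secret oracle-cloud-object-storage-bucket-backup \
#     "$(oci_s3_endpoint "$OCI_STORAGE_NAMESPACE" eu-zurich-1)" \
#     "$OCI_KEY_ID" "$OCI_KEY_SECRET"
```

The endpoint format is the same one used for s3Url in the velero values file later in this post.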

- Install the kubernetes VolumeSnapshot CRDs and controller.

Save the file below as kustomization.yaml in an empty directory, then create all the resources defined in it by running kubectl apply -k . from that directory. Note that -k expects the directory containing kustomization.yaml, not the file itself.

Important: before applying the file, check in the official longhorn documentation which CRD and controller versions are compatible with your longhorn release.

At the time of writing, longhorn v1.7.2 supports CRDs and controller version v7.0.2.

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: kube-system
resources:
  - https://github.com/kubernetes-csi/external-snapshotter/client/config/crd/?ref=v7.0.2
  - https://github.com/kubernetes-csi/external-snapshotter/deploy/kubernetes/snapshot-controller/?ref=v7.0.2
```

Check that the resources have been created:

```shell
kubectl get crds | grep volumesnapshot
```

```text
volumesnapshotclasses.snapshot.storage.k8s.io    2025-01-11T12:45:01Z
volumesnapshotcontents.snapshot.storage.k8s.io   2025-01-11T12:45:01Z
volumesnapshotlocations.velero.io                2025-01-11T12:57:59Z
volumesnapshots.snapshot.storage.k8s.io          2025-01-11T12:45:01Z
```

```shell
kubectl get pods -n kube-system -l app.kubernetes.io/name=snapshot-controller
```

```text
NAME                                   READY   STATUS    RESTARTS      AGE
snapshot-controller-665d46dc8f-bcxjr   1/1     Running   1 (19m ago)   45h
snapshot-controller-665d46dc8f-gvktb   1/1     Running   1 (20m ago)   45h
```
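The two checks above can be scripted, for instance to run right after the kustomization has been applied. This is a minimal sketch: check_snapshot_prerequisites and missing_snapshot_crds are hypothetical helper names, and the deployment name snapshot-controller is inferred from the pod names shown above.

```shell
#!/usr/bin/env bash
# Sketch: verify the VolumeSnapshot CRDs and the snapshot controller.
set -euo pipefail

# CRDs installed from external-snapshotter's client/config/crd directory.
REQUIRED_SNAPSHOT_CRDS=(
  volumesnapshotclasses.snapshot.storage.k8s.io
  volumesnapshotcontents.snapshot.storage.k8s.io
  volumesnapshots.snapshot.storage.k8s.io
)

# Reads CRD names (one per line) on stdin and prints every required CRD
# missing from that list; prints nothing when all of them are present.
missing_snapshot_crds() {
  local present crd
  present=$(cat)
  for crd in "${REQUIRED_SNAPSHOT_CRDS[@]}"; do
    grep -qxF "$crd" <<<"$present" || echo "$crd"
  done
}

# Queries the cluster: fails if a CRD is missing, then waits for the
# snapshot-controller deployment (name assumed) to become available.
check_snapshot_prerequisites() {
  local missing
  missing=$(kubectl get crds -o name | cut -d/ -f2 | missing_snapshot_crds)
  if [ -n "$missing" ]; then
    printf 'missing CRDs:\n%s\n' "$missing" >&2
    return 1
  fi
  kubectl -n kube-system rollout status deployment/snapshot-controller --timeout=120s
}

# Example:
#   check_snapshot_prerequisites
```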

- Create the VolumeSnapshotClass resources.

Three VolumeSnapshotClass resources must be created:

- one that creates longhorn snapshots (type: snap),
- one that creates longhorn backups (type: bak),
- one to be used by velero, identified by its label (type: bak).

The velero class sets deletionPolicy: Retain so that the VolumeSnapshotContent and the underlying longhorn backup are kept when the VolumeSnapshot is deleted, for instance after an accidental deletion of its namespace.

The label velero.io/csi-volumesnapshot-class: "true" on the velero class is necessary to make velero use this class.
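Once the manifests below are applied, the label can be checked. Since all three classes use the driver.longhorn.io driver, velero relies on the label to choose between them, and keeping exactly one labelled class per driver avoids any ambiguity. A minimal sketch with hypothetical helper names:

```shell
#!/usr/bin/env bash
# Sketch: check that exactly one VolumeSnapshotClass for the longhorn CSI
# driver carries the velero selection label (hypothetical helpers).
set -euo pipefail

LONGHORN_DRIVER="driver.longhorn.io"

# Reads "NAME DRIVER" lines on stdin and prints how many of them use the
# driver given as first argument (0 when none do).
count_classes_for_driver() {
  awk -v d="$1" '$2 == d { n++ } END { print n + 0 }'
}

# Lists the labelled classes and fails unless exactly one belongs to longhorn.
check_velero_snapshot_class() {
  local n
  n=$(kubectl get volumesnapshotclass \
        -l velero.io/csi-volumesnapshot-class=true \
        -o custom-columns=NAME:.metadata.name,DRIVER:.driver --no-headers \
      | count_classes_for_driver "$LONGHORN_DRIVER")
  if [ "$n" -ne 1 ]; then
    echo "expected 1 labelled VolumeSnapshotClass for $LONGHORN_DRIVER, found $n" >&2
    return 1
  fi
  echo "velero VolumeSnapshotClass OK"
}

# Example:
#   check_velero_snapshot_class
```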

volume_snapshotclass_snap.yaml:

```yaml
# CSI VolumeSnapshot Associated With Longhorn Snapshot
kind: VolumeSnapshotClass
apiVersion: snapshot.storage.k8s.io/v1
metadata:
  name: longhorn-snapshot-vsc
driver: driver.longhorn.io
deletionPolicy: Delete
parameters:
  type: snap
```

volume_snapshotclass_bak.yaml:

```yaml
# CSI VolumeSnapshot Associated With Longhorn Backup
kind: VolumeSnapshotClass
apiVersion: snapshot.storage.k8s.io/v1
metadata:
  name: longhorn-backup-vsc
driver: driver.longhorn.io
deletionPolicy: Delete
parameters:
  type: bak
```

volume_snapshotclass_velero.yaml:

```yaml
# CSI VolumeSnapshot Associated With Longhorn Backup
kind: VolumeSnapshotClass
apiVersion: snapshot.storage.k8s.io/v1
metadata:
  name: velero-longhorn-backup-vsc
  labels:
    velero.io/csi-volumesnapshot-class: "true"
driver: driver.longhorn.io
deletionPolicy: Retain
parameters:
  type: bak
```

```shell
kubectl apply \
  -f volume_snapshotclass_snap.yaml \
  -f volume_snapshotclass_bak.yaml \
  -f volume_snapshotclass_velero.yaml
```
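The first two classes are not used by velero: they make it possible to request a longhorn snapshot or backup directly through a CSI VolumeSnapshot. A minimal sketch, assuming a hypothetical helper render_volume_snapshot, a hypothetical snapshot name pg-manual-bak, and an existing PVC such as the postgres one used in the test below:

```shell
#!/usr/bin/env bash
# Sketch: render a CSI VolumeSnapshot that asks longhorn for a snapshot or a
# backup of an existing PVC, through one of the classes created above.
set -euo pipefail

# Arguments: snapshot name, namespace, PVC name, VolumeSnapshotClass name.
render_volume_snapshot() {
  local name="$1" namespace="$2" pvc="$3" class="$4"
  cat <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  volumeSnapshotClassName: ${class}
  source:
    persistentVolumeClaimName: ${pvc}
EOF
}

# Example (longhorn-snapshot-vsc for a snapshot, longhorn-backup-vsc for a backup):
#   render_volume_snapshot pg-manual-bak postgres data-my-postgresql-0 longhorn-backup-vsc \
#     | kubectl apply -f -
#   kubectl -n postgres get volumesnapshot pg-manual-bak
```

With longhorn-backup-vsc the resulting backup should show up in the longhorn web UI and in the longhorn bucket prefix, like the ones velero requests.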

- Install velero via helm chart.

Add the velero chart repository and install the chart with the values below, customizing the values file as needed.

```shell
helm repo add vmware-tanzu https://vmware-tanzu.github.io/helm-charts
helm repo update
helm install velero vmware-tanzu/velero --create-namespace --namespace velero -f values.yaml
```

values.yaml:

```yaml
# AWS backend plugin configuration
initContainers:
  - name: velero-plugin-for-aws
    # use version v1.0.0 for Oracle Cloud compatibility
    image: velero/velero-plugin-for-aws:v1.0.0
    imagePullPolicy: IfNotPresent
    volumeMounts:
      - mountPath: /target
        name: plugins

# Object storage configuration
configuration:
  backupStorageLocation:
    - provider: aws
      bucket: [OCI_BUCKET_NAME]
      prefix: velero
      config:
        region: [OCI_REGION]
        s3ForcePathStyle: true
        s3Url: https://[OCI_STORAGE_NAMESPACE].compat.objectstorage.[OCI_REGION].oraclecloud.com
        insecureSkipTLSVerify: true
  # Enable CSI snapshot support
  features: EnableCSI

credentials:
  secretContents:
    cloud: |
      [default]
      aws_access_key_id=[OCI_KEY_ID]
      aws_secret_access_key=[OCI_KEY_SECRET]

# Do not create a VolumeSnapshotLocation. It is not needed for CSI integration
snapshotsEnabled: false
```
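The [OCI_*] placeholders can be filled in before running helm install. A minimal sketch, assuming the values above are kept in a hypothetical template file values.template.yaml and that none of the substituted values contains the characters |, & or \, which sed would interpret:

```shell
#!/usr/bin/env bash
# Sketch: fill the [OCI_*] placeholders of the velero values file
# (hypothetical helper; values.template.yaml is an assumed file name).
set -euo pipefail

# Reads a values template on stdin and writes it with the placeholders replaced.
# Arguments: bucket, region, storage namespace, key id, key secret.
# "\[" makes sed match a literal "[", and "|" is used as the s/// delimiter
# so that "/" in the key secret is safe.
render_velero_values() {
  sed -e "s|\[OCI_BUCKET_NAME]|$1|g" \
      -e "s|\[OCI_REGION]|$2|g" \
      -e "s|\[OCI_STORAGE_NAMESPACE]|$3|g" \
      -e "s|\[OCI_KEY_ID]|$4|g" \
      -e "s|\[OCI_KEY_SECRET]|$5|g"
}

# Example:
#   render_velero_values "$OCI_BUCKET_NAME" eu-zurich-1 "$OCI_STORAGE_NAMESPACE" \
#     "$OCI_KEY_ID" "$OCI_KEY_SECRET" < values.template.yaml > values.yaml
```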

Test

Setup info

- velero is configured to store the backup of the k8s manifests in the velero bucket path prefix
- longhorn is configured to store the PV backups in the longhorn bucket path prefix
- the same bucket is used for both types of resources

Steps

- Create a pod with a PVC attachment. See this previous blog post for an example using postgres.

- Create a backup of the namespace using velero:

```shell
velero backup create postgres0 --include-namespaces=postgres
```

Check the details:

```shell
velero backup describe postgres0 --details
```

```text
Name:         postgres0
Namespace:    velero
Labels:       velero.io/storage-location=default
Annotations:  velero.io/resource-timeout=10m0s
              velero.io/source-cluster-k8s-gitversion=v1.31.4+k3s1
              velero.io/source-cluster-k8s-major-version=1
              velero.io/source-cluster-k8s-minor-version=31

Phase:  Completed

Namespaces:
  Included:  postgres
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        <none>
  Cluster-scoped:  auto

Label selector:  <none>

Or label selector:  <none>

Storage Location:  default

Velero-Native Snapshot PVs:  auto
Snapshot Move Data:          false
Data Mover:                  velero

TTL:  720h0m0s

CSISnapshotTimeout:    10m0s
ItemOperationTimeout:  4h0m0s

Hooks:  <none>

Backup Format Version:  1.1.0

Started:    2025-01-13 12:45:37 +0100 CET
Completed:  2025-01-13 12:45:55 +0100 CET

Expiration:  2025-02-12 12:45:36 +0100 CET

Total items to be backed up:  22
Items backed up:              22

Backup Item Operations:
  Operation for volumesnapshots.snapshot.storage.k8s.io postgres/velero-data-my-postgresql-0-r9fr9:
    Backup Item Action Plugin:  velero.io/csi-volumesnapshot-backupper
    Operation ID:               postgres/velero-data-my-postgresql-0-r9fr9/2025-01-13T11:45:53Z
    Items to Update:
      volumesnapshots.snapshot.storage.k8s.io postgres/velero-data-my-postgresql-0-r9fr9
      volumesnapshotcontents.snapshot.storage.k8s.io /snapcontent-3337c7ae-ead4-488a-b095-51b2accf0ae8
    Phase:    Completed
    Created:  2025-01-13 12:45:53 +0100 CET
    Started:  2025-01-13 12:45:53 +0100 CET
    Updated:  2025-01-13 12:45:53 +0100 CET

Resource List:
  apps/v1/ControllerRevision:
    - postgres/my-postgresql-7bd6c56b94
  apps/v1/StatefulSet:
    - postgres/my-postgresql
  discovery.k8s.io/v1/EndpointSlice:
    - postgres/my-postgresql-h8xt5
    - postgres/my-postgresql-hl-ztr9p
  networking.k8s.io/v1/NetworkPolicy:
    - postgres/my-postgresql
  policy/v1/PodDisruptionBudget:
    - postgres/my-postgresql
  snapshot.storage.k8s.io/v1/VolumeSnapshot:
    - postgres/velero-data-my-postgresql-0-r9fr9
  snapshot.storage.k8s.io/v1/VolumeSnapshotClass:
    - velero-longhorn-backup-vsc
  snapshot.storage.k8s.io/v1/VolumeSnapshotContent:
    - snapcontent-3337c7ae-ead4-488a-b095-51b2accf0ae8
  v1/ConfigMap:
    - postgres/kube-root-ca.crt
  v1/Endpoints:
    - postgres/my-postgresql
    - postgres/my-postgresql-hl
  v1/Namespace:
    - postgres
  v1/PersistentVolume:
    - pvc-972b268c-9713-4c43-8948-4d8daa1a4ef7
  v1/PersistentVolumeClaim:
    - postgres/data-my-postgresql-0
  v1/Pod:
    - postgres/my-postgresql-0
  v1/Secret:
    - postgres/my-postgresql
    - postgres/sh.helm.release.v1.my-postgresql.v1
  v1/Service:
    - postgres/my-postgresql
    - postgres/my-postgresql-hl
  v1/ServiceAccount:
    - postgres/default
    - postgres/my-postgresql

Backup Volumes:
  Velero-Native Snapshots: <none included>

  CSI Snapshots:
    postgres/data-my-postgresql-0:
      Snapshot:
        Operation ID: postgres/velero-data-my-postgresql-0-r9fr9/2025-01-13T11:45:53Z
        Snapshot Content Name: snapcontent-3337c7ae-ead4-488a-b095-51b2accf0ae8
        Storage Snapshot ID: bak://pvc-972b268c-9713-4c43-8948-4d8daa1a4ef7/backup-67ddf0685e5a4e55
        Snapshot Size (bytes): 209715200
        CSI Driver: driver.longhorn.io
        Result: succeeded

  Pod Volume Backups: <none included>

HooksAttempted:  0
HooksFailed:     0
```

Check the content of the bucket: new files should have been placed in the velero and longhorn prefixes.

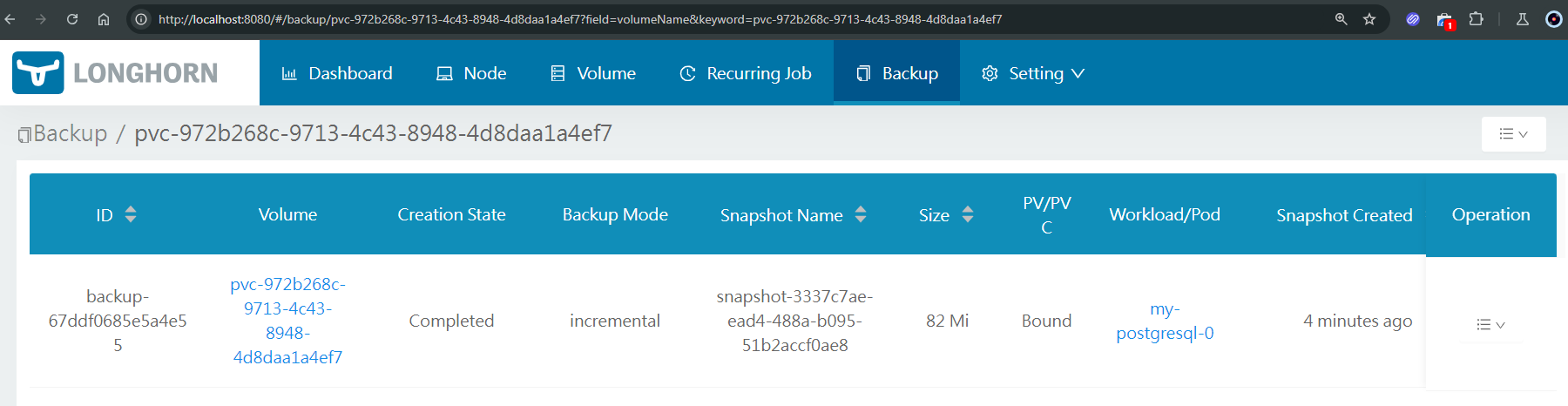

Also, the longhorn backups requested by velero through the CSI snapshot CRDs should be visible in the longhorn web UI.
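Instead of running describe repeatedly, velero backup create and velero restore create accept a --wait flag. When the backup has already been started, its status can also be polled. A minimal sketch with hypothetical helper names; it uses the fully qualified resource names because longhorn also defines a backups resource (backups.longhorn.io):

```shell
#!/usr/bin/env bash
# Sketch: wait for a velero Backup or Restore to finish (hypothetical helpers).
set -euo pipefail

# Succeeds for the phases a velero Backup or Restore ends in.
is_terminal_phase() {
  case "$1" in
    Completed|PartiallyFailed|Failed|FailedValidation) return 0 ;;
    *) return 1 ;;
  esac
}

# Arguments: backup|restore, resource name, timeout in seconds (default 600).
# Polls .status.phase every 5 seconds; succeeds only on Completed.
wait_for_velero() {
  local kind="$1" name="$2" timeout="${3:-600}" phase="" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    phase=$(kubectl -n velero get "${kind}s.velero.io" "$name" \
      -o jsonpath='{.status.phase}' 2>/dev/null || true)
    if is_terminal_phase "$phase"; then
      echo "${kind}/${name}: ${phase}"
      if [ "$phase" = "Completed" ]; then return 0; fi
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "timed out waiting for ${kind}/${name} (last phase: ${phase:-unknown})" >&2
  return 1
}

# Example:
#   wait_for_velero backup postgres0
#   wait_for_velero restore postgres0-20250113125808
```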

- Delete the namespace.

```shell
kubectl delete namespace postgres
```

Check the PVs: since its reclaim policy is Retain, the PV previously bound to the deleted PVC is kept and now marked Released.

```shell
kubectl get pv
```

```text
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS     CLAIM                           STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
pvc-972b268c-9713-4c43-8948-4d8daa1a4ef7   200Mi      RWO            Retain           Released   postgres/data-my-postgresql-0   longhorn       <unset>                          46h
```

- Restore the backup and check its status. velero names the restore after the backup, followed by a timestamp.

```shell
velero restore create --from-backup postgres0
velero restore describe postgres0-20250113125808 --details
```

```text
Name:         postgres0-20250113125808
Namespace:    velero
Labels:       <none>
Annotations:  <none>

Phase:                       Completed
Total items to be restored:  22
Items restored:              22

Started:    2025-01-13 12:58:08 +0100 CET
Completed:  2025-01-13 12:58:14 +0100 CET

Warnings:
  Velero:     <none>
  Cluster:    could not restore, VolumeSnapshotContent "snapcontent-3337c7ae-ead4-488a-b095-51b2accf0ae8" already exists. Warning: the in-cluster version is different than the backed-up version
  Namespaces:
    postgres:  could not restore, ConfigMap "kube-root-ca.crt" already exists. Warning: the in-cluster version is different than the backed-up version

Backup:  postgres0

Namespaces:
  Included:  all namespaces found in the backup
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io, csinodes.storage.k8s.io, volumeattachments.storage.k8s.io, backuprepositories.velero.io
  Cluster-scoped:  auto

Namespace mappings:  <none>

Label selector:  <none>

Or label selector:  <none>

Restore PVs:  auto

CSI Snapshot Restores:
  postgres/data-my-postgresql-0:
    Snapshot:
      Snapshot Content Name: velero-data-my-postgresql-0-r9fr9-5cjpj
      Storage Snapshot ID: bak://pvc-972b268c-9713-4c43-8948-4d8daa1a4ef7/backup-67ddf0685e5a4e55
      CSI Driver: driver.longhorn.io

Existing Resource Policy:  <none>
ItemOperationTimeout:      4h0m0s

Preserve Service NodePorts:  auto

Uploader config:

HooksAttempted:  0
HooksFailed:     0

Resource List:
  apps/v1/ControllerRevision:
    - postgres/my-postgresql-7bd6c56b94(created)
  apps/v1/StatefulSet:
    - postgres/my-postgresql(created)
  discovery.k8s.io/v1/EndpointSlice:
    - postgres/my-postgresql-h8xt5(created)
    - postgres/my-postgresql-hl-ztr9p(created)
  networking.k8s.io/v1/NetworkPolicy:
    - postgres/my-postgresql(created)
  policy/v1/PodDisruptionBudget:
    - postgres/my-postgresql(created)
  snapshot.storage.k8s.io/v1/VolumeSnapshot:
    - postgres/velero-data-my-postgresql-0-r9fr9(created)
  snapshot.storage.k8s.io/v1/VolumeSnapshotClass:
    - velero-longhorn-backup-vsc(skipped)
  snapshot.storage.k8s.io/v1/VolumeSnapshotContent:
    - snapcontent-3337c7ae-ead4-488a-b095-51b2accf0ae8(failed)
  v1/ConfigMap:
    - postgres/kube-root-ca.crt(failed)
  v1/Endpoints:
    - postgres/my-postgresql(created)
    - postgres/my-postgresql-hl(created)
  v1/Namespace:
    - postgres(created)
  v1/PersistentVolume:
    - pvc-972b268c-9713-4c43-8948-4d8daa1a4ef7(skipped)
  v1/PersistentVolumeClaim:
    - postgres/data-my-postgresql-0(created)
  v1/Pod:
    - postgres/my-postgresql-0(created)
  v1/Secret:
    - postgres/my-postgresql(created)
    - postgres/sh.helm.release.v1.my-postgresql.v1(created)
  v1/Service:
    - postgres/my-postgresql(created)
    - postgres/my-postgresql-hl(created)
  v1/ServiceAccount:
    - postgres/default(skipped)
    - postgres/my-postgresql(created)
```

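The two warnings are expected: the VolumeSnapshotContent was kept in the cluster by deletionPolicy: Retain, and the kube-root-ca.crt ConfigMap is created automatically by kubernetes in every new namespace.

A new PV is created from the longhorn backup, and the restored pod binds to it through the restored PVC. The previous PV remains marked Released and can be deleted manually.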
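The leftover PV can be located by its former claim. A minimal sketch with hypothetical helper names, meant to list candidates for review before deleting anything. Per the kubernetes documentation on the Retain policy, deleting the PV object does not remove the backing storage, so the longhorn volume may also need to be deleted from the longhorn web UI.

```shell
#!/usr/bin/env bash
# Sketch: find Released PVs left behind for a given PVC (hypothetical helpers).
set -euo pipefail

# Reads "NAME PHASE CLAIM_NAMESPACE CLAIM_NAME" lines on stdin and prints the
# names of the Released PVs whose claim matches the two arguments.
released_pvs_for_claim() {
  awk -v ns="$1" -v claim="$2" \
    '$2 == "Released" && $3 == ns && $4 == claim { print $1 }'
}

# Queries the cluster and prints the matching PV names.
# Arguments: PVC namespace, PVC name.
list_released_pvs() {
  kubectl get pv --no-headers \
    -o custom-columns=NAME:.metadata.name,PHASE:.status.phase,NS:.spec.claimRef.namespace,CLAIM:.spec.claimRef.name \
    | released_pvs_for_claim "$1" "$2"
}

# Example, after reviewing the output:
#   for pv in $(list_released_pvs postgres data-my-postgresql-0); do
#     kubectl delete pv "$pv"
#   done
```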

Sources

- https://picluster.ricsanfre.com/docs/backup/#enable-csi-snapshots-support-in-k3s

- https://longhorn.io/docs/1.7.2/snapshots-and-backups/csi-snapshot-support/enable-csi-snapshot-support/

- https://longhorn.io/docs/1.7.2/snapshots-and-backups/csi-snapshot-support/csi-volume-snapshot-associated-with-longhorn-backup/

- https://longhorn.io/docs/1.7.2/snapshots-and-backups/csi-snapshot-support/csi-volume-snapshot-associated-with-longhorn-snapshot/

- https://kubernetes.io/docs/concepts/storage/volume-snapshot-classes/